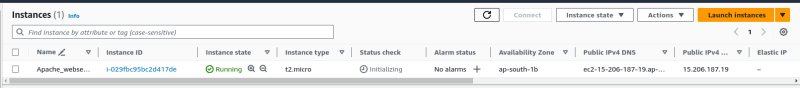

Create a traget machine to install Mysql server

Here I have created a MySQL target machine using VM (ubuntu 22.04)

Lets Install Mysql server

apt update

apt install mysql-server

systemctl start mysql

systemctl enable mysql

systemctl status mysql

Add prometheus user in prometheus group

useradd --no-create-home --shell /bin/false prometheus

groupadd --system prometheus

useradd -s /sbin/nologin --system -g prometheus prometheus

- Downloading latest Mysqld-exporter

curl -s https://api.github.com/repos/prometheus/mysqld_exporter/releases/latest | grep browser_download_url | grep linux-amd64 | cut -d '"' -f 4 | wget -qi -

- Extract the downloaded file

tar xvf mysqld_exporter*.tar.gz

root@mysql-2:~# tar xvf mysqld_exporter*.tar.gz

mysqld_exporter-0.15.0.linux-amd64/

mysqld_exporter-0.15.0.linux-amd64/mysqld_exporter

mysqld_exporter-0.15.0.linux-amd64/NOTICE

mysqld_exporter-0.15.0.linux-amd64/LICENSE

Move the mysqld-exporter to /usr/local/bin

mv mysqld_exporter-*.linux-amd64/mysqld_exporter /usr/local/bin/

- giving permission to mysqld-exporter

chmod +x /usr/local/bin/mysqld_exporter

- verify the mysqld-exporter version

mysqld_exporter --version

root@mysql-2:~# mysqld_exporter --version

mysqld_exporter, version 0.15.0 (branch: HEAD, revision: 6ca2a42f97f3403c7788ff4f374430aa267a6b6b)

build user: root@c4fca471a5b1

build date: 20230624-04:09:04

go version: go1.20.5

platform: linux/amd64

tags: netgo

- Creating MySQL user and DB for mysqld-exporter

CREATE USER 'mysqld_exporter'@'localhost' IDENTIFIED BY 'StrongPassword';

GRANT PROCESS, REPLICATION CLIENT, SELECT ON *.* TO 'mysqld_exporter'@'localhost';

root@mysql-2:~# mysql -u root -p

Enter password:

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 8

Server version: 8.0.35-0ubuntu0.22.04.1 (Ubuntu)

Copyright (c) 2000, 2023, Oracle and/or its affiliates.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> CREATE USER 'mysqld_exporter'@'localhost' IDENTIFIED BY 'password';

Query OK, 0 rows affected (0.02 sec)

mysql> GRANT PROCESS, REPLICATION CLIENT, SELECT ON *.* TO 'mysqld_exporter'@'localhost';

Query OK, 0 rows affected (0.01 sec)

mysql> FLUSH PRIVILEGES;

Query OK, 0 rows affected (0.01 sec)

mysql> EXIT

Bye

- Configure MySQL DB credentials

vim /etc/.mysqld_exporter.cnf

root@mysql-2:~# cat /etc/.mysqld_exporter.cnf

[client]

user=mysqld_exporter

password=******

chown root:prometheus /etc/.mysqld_exporter.cnf

- Create systemmd unit file

vim /etc/systemd/system/mysql_exporter.service

root@mysql-2:~# cat /etc/systemd/system/mysql_exporter.service

[Unit]

Description=Prometheus MySQL Exporter

After=network.target

User=prometheus

Group=prometheus

[Service]

Type=simple

Restart=always

ExecStart=/usr/local/bin/mysqld_exporter \

--config.my-cnf /etc/.mysqld_exporter.cnf \

--collect.global_status \

--collect.info_schema.innodb_metrics \

--collect.auto_increment.columns \

--collect.info_schema.processlist \

--collect.binlog_size \

--collect.info_schema.tablestats \

--collect.global_variables \

--collect.info_schema.query_response_time \

--collect.info_schema.userstats \

--collect.info_schema.tables \

--collect.perf_schema.tablelocks \

--collect.perf_schema.file_events \

--collect.perf_schema.eventswaits \

--collect.perf_schema.indexiowaits \

--collect.perf_schema.tableiowaits \

--collect.slave_status \

--web.listen-address=0.0.0.0:9104

[Install]

WantedBy=multi-user.target

- Reload the daemon and start,enable,status of the service

systemctl daemon-reload

systemctl enable mysql_exporter

systemctl start mysql_exporter

systemctl status mysql_exporter

Already we have created a prometheus server machine and done with the installation of(prometheus,grafana,alertmanager,node-exporter)

Adding scrape config file to communicate with db

vim /etc/prometheus/prometheus.yml

- job_name: 'server1_db'

scrape_interval: 5s

static_configs:

- targets: ['server_ip:9104']

root@prometheus-2:~# cat etc/prometheus/prometheus.yml

cat: etc/prometheus/prometheus.yml: No such file or directory

root@prometheus-2:~# cat /etc/prometheus/prometheus.yml

global:

scrape_interval: 10s

scrape_configs:

- job_name: 'prometheus'

scrape_interval: 5s

static_configs:

- targets: ['localhost:9090']

- job_name: 'prometheus_server'

scrape_interval: 5s

static_configs:

- targets: ['192.168.122.138:9100']

- job_name: 'server1_db'

scrape_interval: 5s

static_configs:

- targets: ['192.168.122.137:9104']

- Adding Alert rules for msqld-exporter

vim /etc/prometheus/rules/alert-rules.yml

alertmanager rules:

- alert: MysqlDown

expr: mysql_up == 0

for: 2m

labels:

severity: critical

annotations:

summary: MySQL down (instance {{ $labels.instance }})

description: "MySQL instance is down on {{ $labels.instance }}\n VALUE = {{ $value }}\n LABELS = {{ $labels }}"

- Restart and verify the status of all services (prometheus,grafana,node_exporter,alertmanager)

systemctl restart prometheus

systemctl status prometheus

systemctl restart grafana

systemctl status grafana

systemctl restart node_exporter

systemctl status node_exporter

systemctl restart alertmanager

systemctl status alertmanager

[(https://github.com/prometheus/mysqld_exporter/blob/main/mysqld-mixin/dashboards/mysql-overview.json#L3)]

- Copy the mysql-overview.jason file from the above link and paste under "import via dashboard JASON model"

- Name the Dashboard and keep time sync "every 5 minutes"

and the save the dashboard.

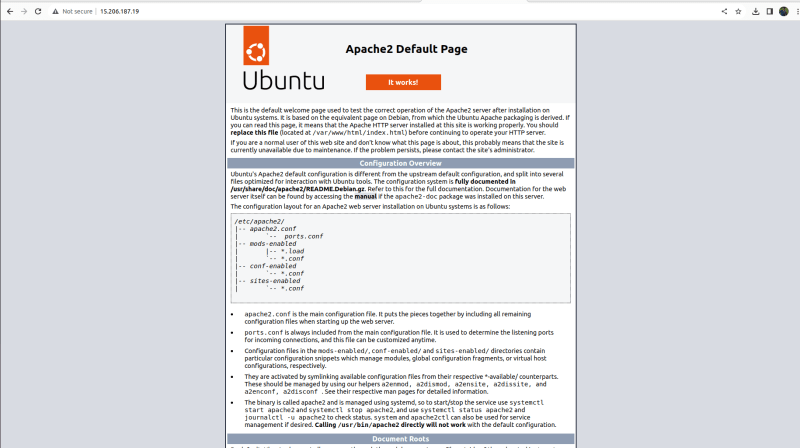

Now we able to Monitor the MySQL DB using Prometheus-Grafana/mysqld-exporter.